When we talk of artificial intelligence (AI), we mainly mean adaptive computer programs, i.e. those with the capacity to learn. These are trained on a vast pool of data and spot certain regularities within it. “Methods like these can make it easier to work through tasks that would otherwise be very time-consuming and labor-intensive,” explains Dr. Moritz Wolter, a computer scientist at the University of Bonn. “So there are a great many fields of research that could benefit enormously. We’re trying to help them out with our expertise.”

Wolter is one of the coordinators of the BNTrAInee project. Run by the Digital Science Center at the University of Bonn, it aims to advance this kind of interdisciplinary collaboration and has secured an impressive €1.99 million in funding from the German Federal Ministry of Education and Research (BMBF) over the past two years. “The project is teaming up students with researchers who have a problem they want to solve using AI,” says the computer scientist, who is also a member of the Transdisciplinary Research Area (TRA) “Modelling” at the University of Bonn.

A win-win situation

Thinking outside the box in this way benefits both sides in equal measure. “Our students have to code software for their degree anyway,” says Dr. Elena Trunz, also a coordinator of BNTrAInee. “BNTrAInee allows them to do so as part of a real-life research project, with the added satisfaction that the fruits of their labor will actually get used afterward. In turn, the users, i.e. the researchers and their students, find out how they can apply AI and machine learning to their own projects to their advantage. At the same time, they see where their limits are.”

Both sides are also learning to speak a common language. The up-and-coming computer scientists first need to understand precisely what problem AI is supposed to help with, while their clients learn what data the algorithms require for this purpose and how it has to be structured. They are also given training in how machine learning methods work, which covers some of the issues tackled recently by AI researchers, such as the question of what basis the methods use to draw their conclusions in the first place. This matters because many algorithms are a kind of “black box”: they supply results, but it is unclear how they arrive at them. This makes it harder to gauge how reliably they are actually working.

How do financial crises influence “situations wanted” ads?

Someone who has high hopes of adaptive algorithms is Dr. Felix Selgert. One of the questions that the economic historian is investigating is how economic turmoil, such as that experienced during the hyperinflation of 1923, is reflected in the press. “For instance, I’m interested in what conclusions about the mood in society you can draw from newspaper articles,” he says. “My research is also focusing on analyzing ads, such as job and promotional ads.”

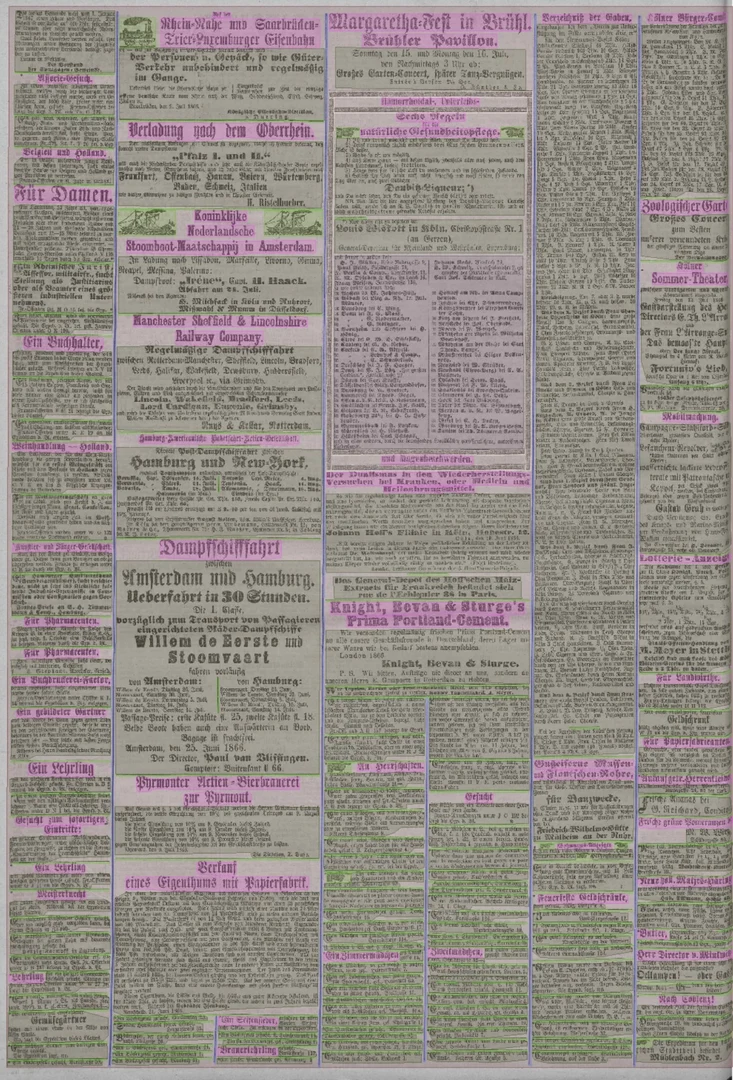

His problem is the sheer quantity of material that he would need to go through. At some points during the 1920s, for instance, the “Kölnische Zeitung” newspaper alone was being printed several times a day, 365 days a year. Even transcribing the editions from a single year, i.e. creating a digital copy, would mean thousands of hours’ work. “Traditional optical character recognition software offers little help with that, unfortunately,” he says. “It has massive problems with the layout, among other things.”

This is because paper was hard to come by in those days, so the newsprint was packed tightly on the page, and thin lines instead of blank space were used as column separators. “Normal” computer programs often miss these separators. “This causes them to amalgamate two articles that are next to each other, for instance,” Selgert says. They also have difficulties identifying headlines or subheadlines correctly and recognizing what story they belong to.

Computer science students have therefore developed an AI application that detects the layout of a page and splits it into its constituent elements. The next step will be to use another piece of self-learning software for character recognition, although this is still being developed. “The ultimate aim is for the AI to capture the full text of all articles and other elements in a scanned-in edition and categorize everything automatically,” adds Selgert, who is also a member of the Transdisciplinary Research Area (TRA) “Individuals and Societies” at the University of Bonn. “But there’s a long way to go until we get to that point.”
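To make the layout problem more concrete, here is a minimal sketch of how thin column rules could be located on a scanned page. It is not the students' AI application, whose internals the article does not describe. It is a simple classical heuristic (a vertical projection profile) shown only for illustration. The function name `find_column_separators`, its parameters and the synthetic test page are all assumptions made for this example.

```python
def find_column_separators(page, min_coverage=0.9, max_width=3):
    """Return the x-positions of thin vertical rules on a binarized page.

    page: list of rows, each a list of 0 (blank) or 1 (ink).
    min_coverage and max_width are illustrative values, not tuned ones.
    """
    height, width = len(page), len(page[0])
    # Fraction of inked pixels in every pixel column.
    coverage = [sum(row[x] for row in page) / height for x in range(width)]

    separators = []
    x = 0
    while x < width:
        if coverage[x] < min_coverage:
            x += 1
            continue
        start = x
        while x < width and coverage[x] >= min_coverage:
            x += 1
        # Keep only thin runs; wide dark bands are more likely images.
        if x - start <= max_width:
            separators.append((start + x - 1) // 2)
    return separators


# Synthetic page: a line of "text" on every fourth row, one rule at x = 30.
page = [[1 if y % 4 == 0 else 0 for _ in range(61)] for y in range(100)]
for row in page:
    row[30] = 1

print(find_column_separators(page))  # prints [30]
```

A heuristic like this only works when the rule runs unbroken down the page. On real historical scans the lines are often faded, skewed or interrupted, which is one reason a learned layout model is attractive here.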

Better cancer diagnosis, more data protection

The project is just one example of how AI algorithms are being tailored to some highly specific research questions. For instance, computer science students at the University of Bonn and radiologists at the University Hospital Bonn want to help improve cancer diagnosis and are thus developing techniques for analyzing microscope images of removed tissue. Another project, meanwhile, is all about tightening data protection. Biologists often record animal sounds to get an idea of how biodiverse a particular area is. Sometimes, their recordings also capture “background noise” in the form of people’s conversations, so an algorithm will be used to identify the relevant snippets and remove them automatically.
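The removal step in the data protection example can be sketched in a few lines. The sketch assumes that some detector has already flagged the time ranges containing speech; the article does not say how the project identifies them. The function `mute_segments` and its arguments are hypothetical names chosen for illustration, and muting rather than cutting the snippets is likewise an assumption, made here so that the timestamps of the animal sounds stay unchanged.

```python
def mute_segments(samples, sample_rate, segments):
    """Return a copy of `samples` with the flagged time ranges silenced.

    samples: list of audio sample values.
    sample_rate: samples per second.
    segments: list of (start_seconds, end_seconds) pairs, assumed to come
              from a separate speech detector that is not shown here.
    """
    cleaned = list(samples)  # leave the original recording untouched
    for start_s, end_s in segments:
        start = max(0, int(start_s * sample_rate))
        end = min(len(cleaned), int(end_s * sample_rate))
        for i in range(start, end):
            cleaned[i] = 0.0
    return cleaned


# Ten samples at 2 samples per second; speech flagged from 1.0 s to 2.5 s.
recording = [1.0] * 10
cleaned = mute_segments(recording, sample_rate=2, segments=[(1.0, 2.5)])
print(cleaned)
```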