Until now, researchers had only limited and costly methods for detecting and controlling behavior in behavioral experiments. "With the help of AI-assisted animal body posture recognition developed in previous years, it has recently become possible to determine the temporal and spatial sequence of movements more precisely," explains study leader Dr. Martin Schwarz of the Institute of Experimental Epileptology and Cognition Research (EECR) at University Hospital Bonn. He is a member of the Transdisciplinary Research Area (TRA) "Life and Health" at the University of Bonn, one of six inter-faculty alliances in which researchers from different disciplines work together on future-relevant research topics at the University of Excellence. One of the TRA’s main aims is to support research at the interface of biomedicine and artificial intelligence.

Observing moving mice

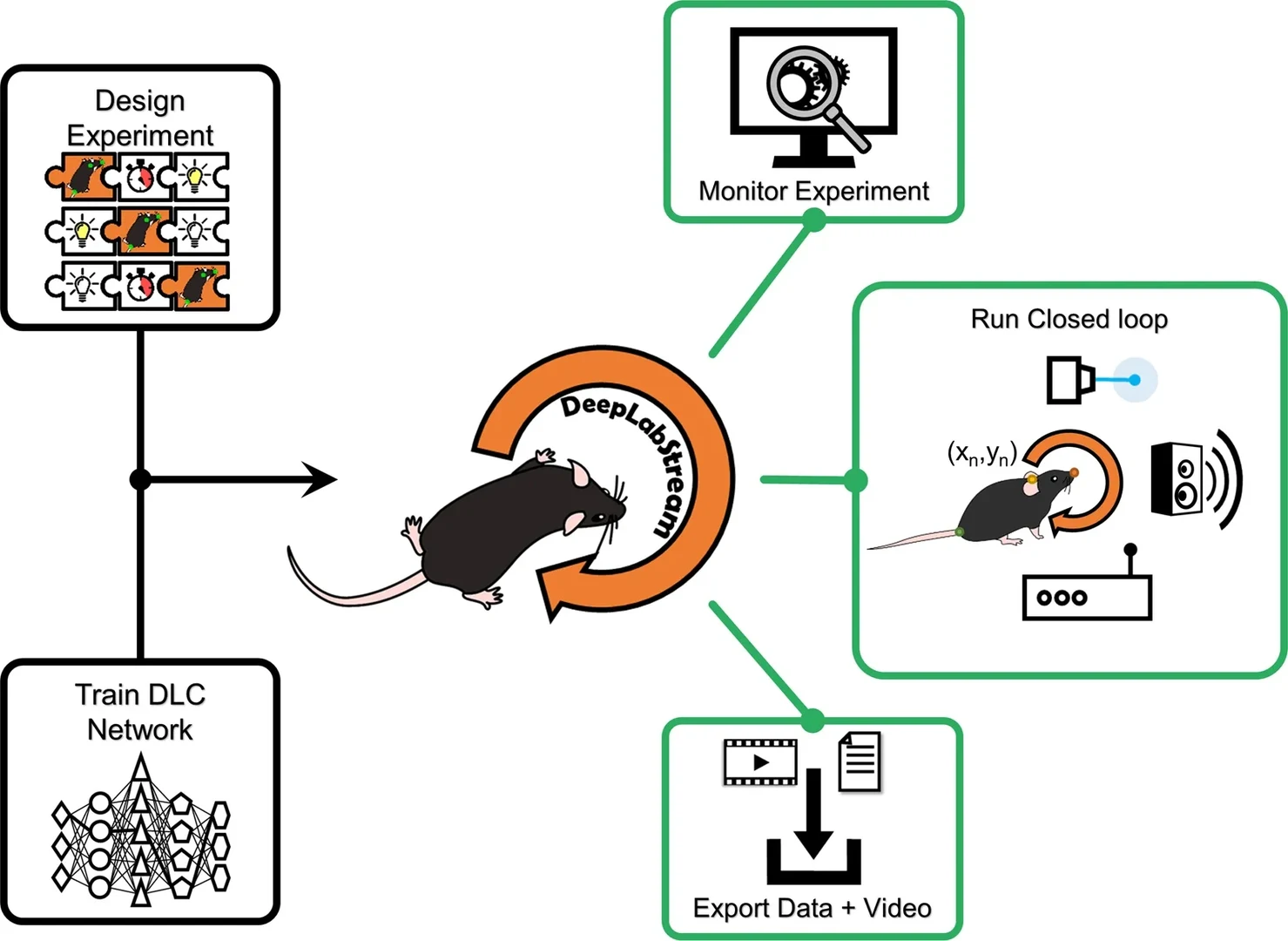

To test the method, known as DeepLabStream (DLStream), the researchers observed freely moving mice in a box. Using DLStream, the mice were conditioned to move to a corner of the box within a set period of time after being shown a specific image, in order to collect a reward. Cameras captured the animals' movements, and the system automatically created logs of the movements.
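The logic of such a conditioning trial can be illustrated with a minimal Python sketch. It is not taken from the DLStream code base: the function name `trial_outcome`, the rectangular reward zone and the `(time, x, y)` track format are assumptions made purely for illustration.

```python
def trial_outcome(track, reward_zone, time_limit_s):
    """Decide whether a trial ends in a reward or a timeout.

    track        -- iterable of (t_seconds, x, y) samples, with t measured
                    from the moment the image is shown (hypothetical format)
    reward_zone  -- (x_min, y_min, x_max, y_max) rectangle marking the corner
    time_limit_s -- time allowed to reach the corner
    """
    x_min, y_min, x_max, y_max = reward_zone
    for t, x, y in track:
        if t > time_limit_s:
            break  # too late: the set period has elapsed
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return ("reward", t)  # animal reached the corner in time
    return ("timeout", None)


# Example: the animal enters the corner zone two seconds after the image.
track = [(0.0, 50, 50), (1.0, 20, 20), (2.0, 5, 5)]
result = trial_outcome(track, reward_zone=(0, 0, 10, 10), time_limit_s=3.0)
```

In a real closed-loop setup, the track would arrive frame by frame from the camera rather than as a finished list.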

In another experiment, the scientists demonstrated a special feature of the new system: they were able to label the neuronal networks that were active at particular head tilts. To do this, they used proteins that, when exposed to light, were activated in the brain only at specific head inclinations and color-coded the underlying neuronal networks. This protein system is called Cal-Light. If the head-tilt-dependent neuronal networks were not activated, no marker appeared, which clarified the causal relationship. "We were able to show that, for the first time, active neuronal circuits can be permanently labeled and subsequently manipulated during selected behavioral episodes using this method," emphasizes Jens Schweihoff, who conducted the study as part of his doctoral thesis at University Hospital Bonn.
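How a posture-dependent trigger of this kind might work can be sketched in a few lines of Python. This is an illustrative assumption, not the researchers' implementation: the three tracked points (nose, neck, tail base), the function names and the 30 to 60 degree window are all hypothetical.

```python
import math


def head_angle_deg(nose, neck, tail_base):
    """Signed angle in degrees between the body axis (tail base -> neck)
    and the head axis (neck -> nose). 0 means the head points straight
    ahead. In image coordinates, where y grows downward, the sign of the
    angle is mirrored compared with a conventional plot."""
    body_x, body_y = neck[0] - tail_base[0], neck[1] - tail_base[1]
    head_x, head_y = nose[0] - neck[0], nose[1] - neck[1]
    cross = body_x * head_y - body_y * head_x
    dot = body_x * head_x + body_y * head_y
    return math.degrees(math.atan2(cross, dot))


def should_stimulate(angle_deg, window=(30.0, 60.0)):
    """Return True while the head angle lies inside the chosen window
    (the window bounds here are arbitrary example values)."""
    low, high = window
    return low <= angle_deg <= high


# Example frame: the head is turned 45 degrees relative to the body axis.
angle = head_angle_deg(nose=(2, 1), neck=(1, 0), tail_base=(0, 0))
light_on = should_stimulate(angle)
```

Evaluating such a check on every camera frame is what would allow the light to be switched on only during the selected head posture.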

Real-time behavior detection

Until now, a limiting factor in the applicability of Cal-Light was that complex behavioral episodes could not be accurately detected in real time. The new method DLStream now has a temporal resolution in the millisecond range. "Since DLStream enables real-time behavior detection, the available range of applications for the Cal-Light method is significantly expanded, which makes it possible to conduct automated, behavior-dependent manipulation during ongoing experiments," stresses Jens Schweihoff. "We believe that using this combination of methods, researchers can now better study the causal relationships between behavior and the underlying neuronal networks."

The method has so far been tested in behavioral experiments on mice, but the researchers expect that it can be applied to other animals in the future without restrictions.

The researchers believe that the new method can significantly increase the reproducibility and effectiveness of experiments, since the important behavioral parameters, for example the position, speed and posture of the experimental animal, can be calculated with high temporal resolution and accuracy. This significantly reduces the variability, i.e. the difference in measured parameters, between individual experiments.
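As a simple illustration of how one such parameter can be derived from tracking data, the following Python sketch computes speed from a sequence of tracked positions. The function name and the input format are assumptions for this example and are not part of DLStream.

```python
import math


def speeds(positions, timestamps):
    """Speed between consecutive frames, in distance units per second.

    positions  -- list of (x, y) coordinates of one tracked body part
    timestamps -- list of frame times in seconds, same length as positions
    """
    if len(positions) != len(timestamps):
        raise ValueError("positions and timestamps must have the same length")
    result = []
    for (x0, y0), (x1, y1), t0, t1 in zip(
        positions, positions[1:], timestamps, timestamps[1:]
    ):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        result.append(math.hypot(x1 - x0, y1 - y0) / dt)
    return result


# Example: the animal moves 5 units in 0.5 s, then stays still.
example = speeds([(0, 0), (3, 4), (3, 4)], [0.0, 0.5, 1.0])
```

Because every experiment is evaluated with the same calculation, the measured values do not depend on the judgment of an individual observer.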

The software is now accessible to researchers worldwide via an open-source platform, which should enable them to conduct and automate a wide range of behavioral experiments with AI support.

DeepLabStream as an open-source application: https://github.com/SchwarzNeuroconLab/DeepLabStream

Funding:

The study received financial support from the German Research Foundation within the Collaborative Research Center 1089 "Synaptic Micronetworks in Health and Disease", the Priority Program 2041 "Computational Connectomics" and the Volkswagen Foundation.

Publication: Jens F. Schweihoff, Matvey Loshakov, Irina Pavlova, Laura Kück, Laura A. Ewell & Martin K. Schwarz: DeepLabStream enables closed-loop behavioral experiments using deep learning-based markerless, real-time posture detection. Communications Biology, DOI: 10.1038/s42003-021-01654-9

https://www.nature.com/articles/s42003-021-01654-9

Contact:

Dr. Martin K. Schwarz

Functional Neuroconnectomics group

Institute of Experimental Epileptology and Cognition Research (EECR) and

Life & Brain Center

University of Bonn, University Hospital Bonn

Phone: +228-28719-266

Email: Martin.Schwarz@ukbonn.de